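A few days ago, OpenAI co-founder Sam Altman was fired by the board. A few days later he was back, and he promptly turned around and reorganized the board.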

Microsoft said it would give each of the 700 employees leaving OpenAI $10 million, and even that was not enough to lure them away.

The whole affair was extremely dramatic.

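From the interactions between Sam Altman and Ilya Sutskever, it is clear the two bear no deep grudge against each other. Ilya comes across instead as a brilliant scientist with somewhat low emotional intelligence, who was talked into voting with the other board members to oust Altman, never expected the consequences to be this serious, and has since been reflecting on what happened.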

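Now Altman is back, with the employees behind him. Yet no one at OpenAI has brought up why Ilya voted to oust him. It seems to be a topic OpenAI does not dare make public.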

Today's Reuters report is rather interesting. Here it is, for everyone's amusement:

OpenAI researchers warned board of AI breakthrough ahead of CEO ouster, sources say

Nov 22 (Reuters) - Ahead of OpenAI CEO Sam Altman’s four days in exile, several staff researchers wrote a letter to the board of directors warning of a powerful artificial intelligence discovery that they said could threaten humanity, two people familiar with the matter told Reuters.

The previously unreported letter and AI algorithm were key developments before the board's ouster of Altman, the poster child of generative AI, the two sources said. Prior to his triumphant return late Tuesday, more than 700 employees had threatened to quit and join backer Microsoft (MSFT.O) in solidarity with their fired leader.

The sources cited the letter as one factor among a longer list of grievances by the board leading to Altman's firing, among which were concerns over commercializing advances before understanding the consequences. Reuters was unable to review a copy of the letter. The staff who wrote the letter did not respond to requests for comment.

After being contacted by Reuters, OpenAI, which declined to comment, acknowledged in an internal message to staffers a project called Q* and a letter to the board before the weekend's events, one of the people said. An OpenAI spokesperson said that the message, sent by long-time executive Mira Murati, alerted staff to certain media stories without commenting on their accuracy.

Some at OpenAI believe Q* (pronounced Q-Star) could be a breakthrough in the startup's search for what's known as artificial general intelligence (AGI), one of the people told Reuters. OpenAI defines AGI as autonomous systems that surpass humans in most economically valuable tasks.

Given vast computing resources, the new model was able to solve certain mathematical problems, the person said on condition of anonymity because the individual was not authorized to speak on behalf of the company. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*’s future success, the source said.

Reuters could not independently verify the capabilities of Q* claimed by the researchers.

Researchers consider math to be a frontier of generative AI development. Currently, generative AI is good at writing and language translation by statistically predicting the next word, and answers to the same question can vary widely. But conquering the ability to do math — where there is only one right answer — implies AI would have greater reasoning capabilities resembling human intelligence. This could be applied to novel scientific research, for instance, AI researchers believe.

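
The contrast Reuters draws, between statistically predicting the next word (where answers to the same question can vary) and math (where there is only one right answer), can be illustrated with a toy Python sketch. Everything here is a hypothetical simplification: the word probabilities are invented, the names `NEXT_WORD_PROBS`, `sample_next_word` and `check_math_answer` are illustrative, and real models sample tokens from learned distributions rather than reading a lookup table.

```python
import random

# Hypothetical toy "language model": for one context, a probability
# distribution over possible next words (numbers invented for illustration).
NEXT_WORD_PROBS = {
    "The weather today is": {"sunny": 0.5, "cloudy": 0.3, "rainy": 0.2},
}


def sample_next_word(context: str, rng: random.Random) -> str:
    """Pick the next word at random, weighted by its probability."""
    dist = NEXT_WORD_PROBS[context]
    words = list(dist)
    weights = [dist[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def check_math_answer(answer: int) -> bool:
    """A math question such as 17 * 24 has exactly one correct answer."""
    return answer == 17 * 24


if __name__ == "__main__":
    rng = random.Random(0)
    # Asking the same "question" several times can give different answers.
    print([sample_next_word("The weather today is", rng) for _ in range(5)])
    # Only one answer to the math question passes the check.
    print(check_math_answer(408), check_math_answer(407))
```

Sampling is what makes repeated answers differ; the math check, by contrast, accepts a single value, which is why math is seen as a cleaner test of reasoning.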
Unlike a calculator that can solve a limited number of operations, AGI can generalize, learn and comprehend.

In their letter to the board, researchers flagged AI’s prowess and potential danger, the sources said without specifying the exact safety concerns noted in the letter. There has long been discussion among computer scientists about the danger posed by highly intelligent machines, for instance if they might decide that the destruction of humanity was in their interest.

Researchers have also flagged work by an "AI scientist" team, the existence of which multiple sources confirmed. The group, formed by combining earlier "Code Gen" and "Math Gen" teams, was exploring how to optimize existing AI models to improve their reasoning and eventually perform scientific work, one of the people said.

Altman led efforts to make ChatGPT one of the fastest growing software applications in history and drew investment - and computing resources - necessary from Microsoft to get closer to AGI.

In addition to announcing a slew of new tools in a demonstration this month, Altman last week teased at a summit of world leaders in San Francisco that he believed major advances were in sight.

"Four times now in the history of OpenAI, the most recent time was just in the last couple weeks, I've gotten to be in the room, when we sort of push the veil of ignorance back and the frontier of discovery forward, and getting to do that is the professional honor of a lifetime," he said at the Asia-Pacific Economic Cooperation summit.

A day later, the board fired Altman.

OpenAI declined to comment on the report above. Only Musk has voiced his concern.

There is one more interesting item:

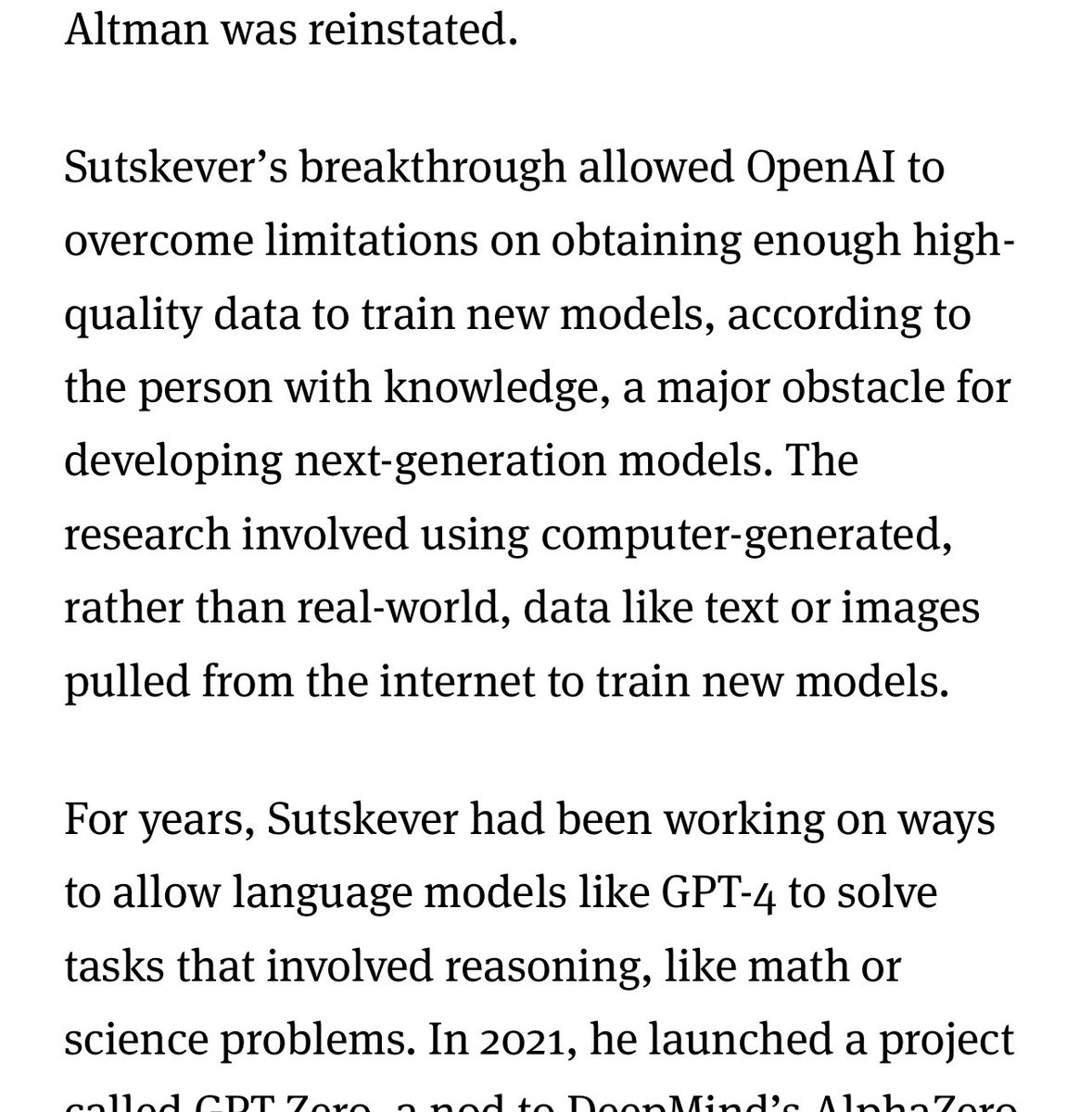

This is from a report by The Information:

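Ilya Sutskever's breakthrough allowed OpenAI to overcome limits on obtaining enough high-quality data to train new models, which, according to a person with knowledge of the matter, had been a major obstacle to developing next-generation models. The research involved training new models on computer-generated data rather than on real-world data, such as text or images, pulled from the internet.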
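
The report gives no details of how that computer-generated data was produced. Purely to illustrate the general idea of synthetic training data, and not OpenAI's method, here is a toy Python sketch that generates grade-school arithmetic problems (the level Reuters mentions for Q*) whose answers are computed by the program itself, so every label is correct without scraping anything. The task, number ranges and function names (`make_synthetic_example`, `make_dataset`) are all hypothetical.

```python
import random


def make_synthetic_example(rng: random.Random) -> dict:
    """Generate one hypothetical arithmetic problem plus its exact answer.

    The answer is computed by the program, so every label is correct by
    construction; no text has to be collected from the internet.
    """
    a, b = rng.randint(2, 99), rng.randint(2, 99)
    op = rng.choice(["+", "-", "*"])
    answer = {"+": a + b, "-": a - b, "*": a * b}[op]
    return {"question": f"What is {a} {op} {b}?", "answer": str(answer)}


def make_dataset(n: int, seed: int = 0) -> list[dict]:
    """Build n synthetic examples reproducibly from a fixed seed."""
    rng = random.Random(seed)
    return [make_synthetic_example(rng) for _ in range(n)]


if __name__ == "__main__":
    for example in make_dataset(3):
        print(example["question"], "->", example["answer"])
```

Because the generator also computes the answer, the data can be checked automatically; whether data like this actually makes a model better at reasoning is a separate question the sketch does not address.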

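For years, Sutskever has been working on getting language models such as GPT-4 to solve tasks that involve reasoning, like math or science problems. In 2021 he launched a project called GPT Zero, inspired by DeepMind's AlphaZero.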

Ilya said in an earlier interview:

"Without going into details I'll just say the Data Limit can be overcome..."

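In other words, GPT has already learned everything on the internet and has nothing left to learn, so it generates its own content to learn from. Ilya has pulled off a technical breakthrough!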

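It is like Newton, who, while studying planetary motion and gravity, found he needed a new mathematical tool to describe these phenomena and so invented calculus. A true master advances from learning knowledge to inventing new knowledge.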

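AI evolution can then break free of the data programmers feed it and instead live on what it produces itself, like a grown child who has become self-reliant. That would already count as a super artificial intelligence with a faint glimmer of consciousness.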

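Finally, the above is pieced together from various reports. Don't believe all of it, and there is no need to worry either; just enjoy it for now.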